Our latest production, E – Departure, is entirely music driven and features some fast camera moves. To make those visually more interesting we use a motion blur that we are fairly happy with. This article explains how this motion blur post-processing effect is achieved. The shader language used in the code samples is GLSL.

E – Departure

Motion blur in real life

A photo with motion blur

First, let’s take a moment to recall what motion blur physically is. When a photo or a video is shot with a camera, the exposure time (or shutter speed) is the parameter controlling how long the film, or sensor, is exposed to incoming light. The longer the exposure, the more light is caught by the sensor, so the brighter the image gets and the blurrier moving elements become. This can be seen as a drawback (in a dark environment getting crisp images is more difficult) or as a desired effect (since motion blur conveys a sense of speed).

While the shutter is open, the effect of light on the sensor is more or less constant: all things being equal, a light trail should have a constant brightness. In other words, it’s almost a box filter. I feel this point needs to be stated since some people confuse this effect with the artistic device of giving more contrast at the base of the trail than at the tail. In photography such an effect can be achieved by firing a flash right before the shutter closes (a technique known as rear curtain sync), but this is not the kind of motion effect I am referring to in this article.

Velocity map based motion blur

I’d say there are mainly three ways of achieving motion blur: stochastic based, accumulative based and velocity map based.

The stochastic approach consists in randomly sampling at different points in time and doing a proper weighted average. Trivial in ray tracing, this is difficult to achieve with rasterization but there is research in that direction. Insufficient sampling will lead to noise.

Accumulative motion blur imitates the physical effect by blending together snapshots taken over the duration of the simulated exposure. Doing so is expensive since the geometry has to be processed and shaded for each snapshot. Insufficient sampling will lead to banding.

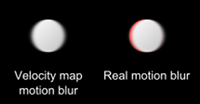

Velocity map based motion blur consists in rendering one image of the scene, no different from the regular rendering one would have without blur, and making every point of this image bleed in some direction according to its speed. To do so, the speed of each point is computed and stored in a buffer: the velocity map. This technique will have the same banding artifacts as the accumulative approach, and some more. This is the technique we chose.

Making three educated steps away from reality

Bleeding is not motion blur

At this point of the description, the velocity map technique can already be expected to give a result that does not match reality. The reason is simply that having object colors bleed over each other is not equivalent to the accumulation of light during an exposure. Let’s give a simple example to illustrate this.

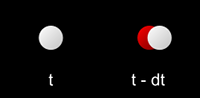

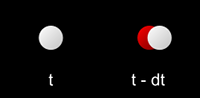

Viewer’s point of view

Imagine we have a black background, a static red sphere, and a moving white sphere passing in front of the red one. If the snapshot used for the final image is taken at the moment the white sphere completely occludes the red one from the viewer’s point of view, the red sphere won’t contribute at all to the final image. With a camera taking a photo, there may be a part of the exposure during which the red sphere is still visible, hence contributing to the amount of light caught by the sensor. Accumulative motion blur will reproduce this effect while velocity map based motion blur will not.

Expected resulting images

We know this is not accurate, but we are trading accuracy for speed, and as long as we don’t forget this and we accept the resulting quality this is ok. It is all a matter of balance between affordable and physically correct.

Linear motion versus arbitrary motion

Now another inaccuracy lies hidden in the description of the velocity map technique: the fact that we will make colors bleed in the direction of the speed. The wording suggests that the blur for a given point will always be linear. And this is what will be assumed, since it is much easier to store the representation of a linear move than any other kind of move. But it means that points moving along a circle, for instance during a rotation, will have a linear trail instead of a curved one. This approximation works as long as the amount of blur is very limited compared to the path. But with elements making small, fast enough circular moves, it no longer works and glitches become noticeable. Should this be a problem, I would suggest storing an instant center of rotation instead, for example.

As long as we are using linear operations, we can also avoid computing the speed for each point, and instead just compute it for each vertex and interpolate between them. The benefit of doing so is obvious: the speed will be a varying value computed in the vertex shader, and available thereafter in the fragment shader.

Scatter versus gather

Finally, let’s get even farther from reality for a technical reason, still trading accuracy for speed. As explained, we will have two images: a snapshot of the scene, and an image representing the speed of each point. Ideally, we would like to have each moving point bleed over its neighbors according to its speed. Unfortunately, today’s GPUs are designed so that they can easily gather, but cannot scatter information: in a fragment shader, you can retrieve data from other fragments, but you cannot modify other fragments. So if you want one fragment to affect another, the affected fragment has to read from it. And if you don’t know in advance which fragments are affected, which is the case here, then for a given fragment you have to check all neighboring fragments and gather the ones affecting it. If a fragment might affect others up to n fragments away, this means having to check on the order of n² fragments, thus O(n²) (for more on this topic, see chapter 32 of the book GPU Gems 2). This quickly becomes very expensive.

So what we will do here is consider motion blur to be homogeneous enough so we can trade the scattering we would like to do for a simple gather operation. Instead of making our fragment bleed over, we will dilute it among other fragments. This is a strong assumption, that will often prove false. But fortunately, the average result will still look nice, even though some obvious artifacts will appear here and there.

Computing the velocity map

Let’s now get to the implementation part. I am supposing here we have some FBO (Frame Buffer Objects) ready, in order to be able to have efficient render to texture, or the equivalent if the API is not OpenGL. I won’t go into further details about this since it is quite a different topic from what is being discussed here. If you don’t know how to set up and use FBO, at least you now have the keywords and can Google it.

We will need a couple of things to build our velocity map. First we will need a separate buffer to store it. With OpenGL this is done with glDrawBuffers(), which specifies which buffers we are going to write into. By default there is only one such buffer, but we can have several; here we just need one additional buffer. Second, we will need a way to compute the speed of the vertices. Motion blur is a screen space effect, so all we need is the speed in screen space.

During a typical forward rendering pass, each object will have some kind of matrix, stack of matrices, or any equivalent means to compute its position in world coordinates. The camera is likely to have its own set of matrices, to transform points from world space to camera space, then from camera space to screen space (in OpenGL this last transformation is typically defined by the matrix referred to by GL_PROJECTION_MATRIX). The position of a vertex at a given time t is therefore multiplied by each of those matrices to get the final position in screen space. To know the speed of such a vertex in screen space, we just need to know where it was at the time t – dt. If dt is exactly the duration of the exposure, the two positions will define the limits of the motion during that time. This is exactly what we want.

So basically we need a way to retrieve the transformation at t – dt while rendering at t. With a predefined animation, this shouldn’t be too difficult: you just have to query your system for t – dt. If your animation is ad hoc, as with real-time interaction, then you can store the previous transformations. Either way, as long as you have both the previous object transformation and the previous camera transformation, you have it all.

In a minimalistic vertex shader, one would have something like the following:

void main() {

gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex; // equivalent to ftransform(), which is a deprecated function

}

For each object we will feed the shading pipeline with an additional information: the transformation matrix of t – dt. I considered that the projection matrix was unlikely to change fast enough to have any consequence on motion blur, so I decided not to store it, and to use the current one for both the old and the current position. It is up to you to choose your policy, but you have to be consistent when computing the speed in the end. Anyway, this leads to the following new vertex shader:

uniform mat4 oldTransformation;

varying vec2 speed;

vec2 getSpeed() {

vec4 oldScreenCoord = gl_ProjectionMatrix * oldTransformation * gl_Vertex;

vec4 newScreenCoord = gl_ProjectionMatrix * gl_ModelViewMatrix * gl_Vertex;

vec2 v = newScreenCoord.xy / newScreenCoord.w - oldScreenCoord.xy / oldScreenCoord.w;

return v;

}

void main() {

gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex; // No change here

speed = getSpeed();

}

You may notice that the current position is computed twice (gl_Position and newScreenCoord). I left it this way to make the code easier to read, since it seems the shader compiler will optimize this anyway. Also, you have to be careful with the homogeneous coordinates: each position has to be divided by its own w component before the two are subtracted.

Now let’s retrieve the interpolated speed in the fragment shader and finally store it in the velocity map. A typical fragment shader would look like this:

void main() {

gl_FragColor = /* whatever */;

}

We are simply modifying it the following way:

varying vec2 speed;

vec3 getSpeedColor() {

return vec3(0.5 + 0.5 * speed, 0.);

}

void main() {

gl_FragData[0] = /* whatever the fragment color was */;

gl_FragData[1] = vec4(getSpeedColor(), 1.); // gl_FragData elements are vec4

}

This is it. At this point we have a velocity map where color represents the motion of each point in screen space. So far so good.

Update: Actually, so far, not so good. The above code contains a bug that I didn’t notice at the time because we rarely met the conditions to make it visible. But since then it has been bugging me as we have been working on a scene that exhibits it pretty badly.

When polygons get clipped, it may lead to broken speed values, resulting in annoying strong blur artifacts. The reason behind this is the non linear operation consisting in dividing by the w component in the vertex shader, that brings wrong values after clipping.

To solve this, I suggest not dividing in the vertex shader, and instead passing the w component to the fragment shader so that the division only happens there.
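Here is a minimal sketch of that fix, in the same legacy GLSL style as the code above. It is one possible reading of the suggestion, not the code used in the demo: the varying names oldClipCoord and newClipCoord are assumptions, and the color written to the first buffer is a placeholder. The vertex shader forwards both positions before the perspective divide:

```glsl
// Vertex shader: pass the undivided positions on to the fragment shader.
uniform mat4 oldTransformation; // model-view transformation at t - dt

varying vec4 oldClipCoord; // position at t - dt, before the divide by w
varying vec4 newClipCoord; // position at t, before the divide by w

void main() {
    oldClipCoord = gl_ProjectionMatrix * oldTransformation * gl_Vertex;
    newClipCoord = gl_ModelViewProjectionMatrix * gl_Vertex;
    gl_Position = newClipCoord;
}
```

The fragment shader then performs the division on the interpolated values:

```glsl
// Fragment shader: divide by w here, after interpolation and clipping.
varying vec4 oldClipCoord;
varying vec4 newClipCoord;

vec3 getSpeedColor() {
    vec2 speed = newClipCoord.xy / newClipCoord.w
               - oldClipCoord.xy / oldClipCoord.w;
    return vec3(0.5 + 0.5 * speed, 0.);
}

void main() {
    gl_FragData[0] = vec4(1.); // placeholder for the regular fragment color
    gl_FragData[1] = vec4(getSpeedColor(), 1.);
}
```

Fragments whose previous position was behind the camera can still yield meaningless values; this sketch does not try to handle that case.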

Using the velocity map and applying the blur

Once the color buffer and velocity map are filled, the post processing pass will use both to generate the motion blurred image. This is done very simply: while in a very minimal blitting shader you would have something like the following,

uniform sampler2D colorBuffer;

void main() {

gl_FragColor = texture2D(colorBuffer, gl_TexCoord[0].xy);

}

here it becomes

uniform sampler2D colorBuffer;

uniform sampler2D velocityMap;

vec4 motionBlur(sampler2D color, sampler2D motion, vec2 uv, float intensity) {

vec2 speed = 2. * texture2D(motion, uv).rg - 1.;

vec2 offset = intensity * speed;

vec3 c = vec3(0.);

float inc = 0.1;

float weight = 0.;

for (float i = 0.; i <= 1.; i += inc) {

c += texture2D(color, uv + i * offset).rgb;

weight += 1.;

}

c /= weight;

return vec4(c, 1.);

}

void main() {

gl_FragColor = motionBlur(colorBuffer, velocityMap, gl_TexCoord[0].xy, 0.5);

}

In this example, the inc value defines a 10-fetch motion blur. It proved to be sufficient in our case, and as few as 8 fetches could be enough for moderate blur. With 20 fetches it becomes really hard to notice artifacts, but it is of course also slower.
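Since the loop counter in the listing above is a float, the exact number of fetches depends on how the repeated additions of inc round. As a hedged alternative, here is a sketch of the same function with an explicit integer sample count. The constant name SAMPLES and its value are assumptions chosen for illustration; everything else mirrors the code above.

```glsl
uniform sampler2D colorBuffer;
uniform sampler2D velocityMap;

const int SAMPLES = 10; // assumed value: number of fetches along the trail

vec4 motionBlur(sampler2D color, sampler2D motion, vec2 uv, float intensity) {
    // Decode the speed from its [0, 1] storage back to [-1, 1].
    vec2 speed = 2. * texture2D(motion, uv).rg - 1.;
    vec2 offset = intensity * speed;

    vec3 c = vec3(0.);
    for (int i = 0; i < SAMPLES; ++i) {
        // t goes from 0 to 1 inclusive, in exactly SAMPLES steps.
        float t = float(i) / float(SAMPLES - 1);
        c += texture2D(color, uv + t * offset).rgb;
    }
    // Box filter: every fetch has the same weight.
    return vec4(c / float(SAMPLES), 1.);
}

void main() {
    gl_FragColor = motionBlur(colorBuffer, velocityMap, gl_TexCoord[0].xy, 0.5);
}
```

Changing SAMPLES to 8 or 20 gives the other fetch counts discussed above without having to reason about the float increment.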

The function argument intensity controls the length of the motion trails. You can set it to be consistent with your rendering speed, or low for a fainter effect, or high for an exaggerated effect.

Dealing with precision issues

At this point, I already had a nice looking motion blur, but I noticed it tended to introduce instability at low speeds. This is because low speed means a short velocity vector, which, being stored as two integers, loses precision in both speed and direction. To overcome this issue, I decided to represent the velocity vector differently.

First, instead of storing its components, I stored the components of the normalized velocity vector on one side, and the norm on the other side. This dealt with the direction problem.

Second, I applied the very same trick gamma correction relies on: using the power function to increase the precision of low values, at the cost of precision for high values. The norm is then stored in non-linear space, and transformed back when it is used. Since varyings are interpolated linearly, we have no choice but to stay in linear space in the vertex shader, and switch to non-linear space only in the fragment shader.
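As a rough worked example, assume the norm is stored in an 8-bit channel, so the stored value is $k/255$ for an integer $k$ (the bit depth is an assumption made for illustration; the buffer format is not specified here). With linear storage, the smallest step between two representable norms is the same everywhere:

$$
\Delta_{\text{linear}} = \frac{1}{255} \approx 3.9 \times 10^{-3}
$$

With the square root applied before storage and the square applied when reading back, the step depends on the value:

$$
\left(\frac{1}{255}\right)^{2} \approx 1.5 \times 10^{-5} \ \text{near } 0,
\qquad
1 - \left(\frac{254}{255}\right)^{2} \approx 7.8 \times 10^{-3} \ \text{near } 1
$$

Under that assumption, slow motion is resolved about 255 times more finely, while the fastest motion is resolved about twice as coarsely.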

So in the vertex shader, once we have our per vertex speed vector we store it this way:

varying vec3 speed;

vec3 getSpeed() {

vec2 v = /* ... */; // screen space speed, computed as before

float norm = length(v);

return vec3(normalize(v), norm);

}

While in the fragment shader the color we write becomes:

vec3 getSpeedColor() {

return vec3(0.5 + 0.5 * speed.xy, pow(speed.z, 0.5)); // speed is the varying written by the vertex shader

}

During the postprocessing pass, the speed is then read this way:

vec3 speedInfo = texture2D(motion, uv).rgb;

vec2 speed = (2. * speedInfo.xy - 1.) * pow(speedInfo.z, 2.);

Update: again, for the reason mentioned above, this code has to be changed and the norm has to be computed in the fragment shader. I am too lazy to fix the code here so this is left as an exercise to the reader. ;-)
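For readers who would rather not do the exercise, here is one possible sketch of the corrected fragment shader. It assumes the vertex shader forwards the positions at t – dt and at t before the divide by w, in two varyings hypothetically named oldClipCoord and newClipCoord. The guard against a null vector is my own addition, since normalize() is undefined for a zero-length input.

```glsl
// Fragment shader: speed, direction and norm are all computed per fragment.
varying vec4 oldClipCoord; // assumed name: position at t - dt, before the divide by w
varying vec4 newClipCoord; // assumed name: position at t, before the divide by w

vec3 getSpeedColor() {
    vec2 v = newClipCoord.xy / newClipCoord.w
           - oldClipCoord.xy / oldClipCoord.w;
    float norm = length(v);

    // Static points have no direction; store a null one instead of dividing by zero.
    vec2 direction = norm > 0. ? v / norm : vec2(0.);

    // Direction remapped to [0, 1], norm stored in non-linear space.
    return vec3(0.5 + 0.5 * direction, pow(norm, 0.5));
}

void main() {
    gl_FragData[0] = vec4(1.); // placeholder for the regular fragment color
    gl_FragData[1] = vec4(getSpeedColor(), 1.);
}
```

The decoding in the post-processing pass stays as shown above. Note that with a normalized buffer format a stored norm above 1 would be clamped, so very fast motion saturates.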

Bugs and limitations

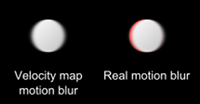

Motion blur artifacts

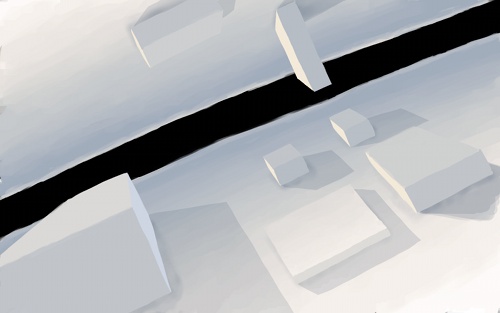

As mentioned, each trade-off introduces inaccuracies that result in visual artifacts. The most important one is obviously the scatter versus gather one. You can see the visual cost implied here: notice the ghost effect visible on the edge of some elements.

Another problem is how to manage the edges of the image. When an object is moving near the border, the algorithm may try to fetch colors outside the image. In that case, I see three solutions: leaving it as is if this is fine in your case (it might simply not happen often enough to be a problem), clamping the fetch to the border (this is what is done in E – Departure; notice how it affects the right side of the sample screenshot), or generating a bigger image to have a thin space outside the displayed frame where colors can still be fetched.
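The second option, clamping the fetch to the border, can be sketched as follows. This is only one possible way of doing it and not necessarily how the demo does it; the helper name fetchClamped is an assumption.

```glsl
uniform sampler2D colorBuffer;
uniform sampler2D velocityMap;

// Fetch a color while keeping the coordinates inside the [0, 1] frame.
vec3 fetchClamped(sampler2D color, vec2 uv) {
    return texture2D(color, clamp(uv, 0., 1.)).rgb;
}

vec4 motionBlur(sampler2D color, sampler2D motion, vec2 uv, float intensity) {
    vec2 speed = 2. * texture2D(motion, uv).rg - 1.;
    vec2 offset = intensity * speed;

    vec3 c = vec3(0.);
    float weight = 0.;
    for (float i = 0.; i <= 1.; i += 0.1) {
        // Fetches that would land outside the image reuse the border color.
        c += fetchClamped(color, uv + i * offset);
        weight += 1.;
    }
    return vec4(c / weight, 1.);
}

void main() {
    gl_FragColor = motionBlur(colorBuffer, velocityMap, gl_TexCoord[0].xy, 0.5);
}
```

Setting the wrap mode of the color texture to GL_CLAMP_TO_EDGE on the API side should give a similar result without touching the shader.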

Those problems though are difficult to notice at low speed, and will appear only briefly at higher speed. So for E – Departure, this was an acceptable trade off.

Conclusion

I presented here a technique that is fairly simple to implement and gives a visually pleasing effect that noticeably increases the realism of the image for a limited cost. It played an important role in our demo; I hope you will find a good use for it too.

We have learned that B – Incubation was nominated in the Breakthrough Performance category of the Scene.org Awards 2010. Needless to say this was awesome news that made our day (and probably the upcoming ones too; being nominated certainly does not mean actually winning the award, but that’s still quite something).
